“Crawling” refers to the process through which search engines like Google scan websites for content, information, and data so that pages can be indexed and ranked in the search results. This is a critical step in Search Engine Optimization (SEO), because a search engine has to be able to “read” your website before it can rank it! This is also where understanding “crawl budget” comes into play. No, it’s not about dollars: it’s the limit on how much time and resources Google is willing to spend crawling your site.

Many things influence how thoroughly search engines are able to crawl your site, and one of the most important is “crawl depth.” Optimizing the crawl depth of your website can open you up to more traffic, more users, and more actions taken on your website.

In this guide, learn what constitutes a good crawl depth, how to achieve this standard, and how to leverage it to drive more traffic!

What is “Crawl Depth” in SEO?

Crawl depth refers to the distance (in clicks) between your homepage and a specific page on your website. In other words, crawl depth measures how many times a user (or a search engine bot) would need to click through different pages on your website to reach that destination. It’s basically the hierarchical “level” of a page within your website.
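To make the definition concrete, here is a minimal sketch of how click depth can be calculated. It assumes you already have a map of which pages link to which (the `site` dictionary and its URLs are made up for illustration) and uses a breadth-first search, so each page gets the smallest number of clicks needed to reach it from the homepage.

```python
from collections import deque

def click_depths(links, homepage):
    """Return {url: clicks from the homepage} using breadth-first search."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site structure: each page maps to the pages it links to.
site = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/crawl-depth"],
    "/services/seo": ["/services/seo/audits"],
}

depths = click_depths(site, "/")
for url, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, url)
```

In this made-up example the homepage sits at depth 0 and `/services/seo/audits` sits at depth 3, because the shortest path to it runs through two intermediate pages.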

Crawl depth is important for a few reasons:

- Websites with a shallower crawl depth (i.e. fewer clicks from the homepage) are crawled more efficiently, making it easier for all the pages to get indexed.

- Pages that are closer in clicks to the homepage more often benefit from the page authority and “link equity” from the homepage, which can also improve rankings.

- The fewer clicks it takes for users to find the information they’re looking for, the better User Experience (UX) you’ve provided to them!

A shallow page depth makes content easier to find and reduces the resources needed for Google to effectively assess, index, and rank all of your important web pages!

Does Google Have a Crawl Budget?

Yes – there are limits to how much time Google can dedicate to crawling a single website. Reducing crawl depth helps ensure that Google bots are able to crawl as many of your web pages as possible within the time/resources allotted.

In Google’s own words:

“… there are limits to how much time Googlebot can spend crawling any single site. The amount of time and resources that Google devotes to crawling a site is commonly called the site’s crawl budget.”

What does this mean for website owners?

This means that if you want Google to crawl all of your web pages (with the goal of getting them indexed and ranking), then you will want to reduce crawl depth as much as possible.

If Google expends all of its resources before being able to crawl your whole site, the remaining pages likely won’t get indexed, which means they can’t bring in any organic traffic.

What is a Good Crawl Depth?

As a best practice, a good crawl depth ensures that all of your important web pages are no more than 3 clicks away from the homepage.

While there is no exact standard defined by Google, this is what SEO professionals have found to be most effective in getting as many web pages crawled and indexed as possible.

A crawl depth of 3 clicks or less helps ensure that search engine bots can efficiently crawl your website’s content, even if you have a high number of pages.

Exceptions to the “Rule”?

Striving for a 3-click crawl depth is a worthy goal for any business. That said, achieving this standard can be difficult for large websites, particularly extensive ecommerce sites.

It is common on these sites to see a crawl depth of, say, 4 to 6 clicks from the homepage. This is because there are simply so many URLs to organize. Still, you should try to reduce crawl depth as much as possible by adding important pages to your site navigation and implementing robust internal linking.
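As a rough illustration of why navigation links matter, the sketch below measures how far a product page is from the homepage before and after one category is added to the main navigation. The `shop` structure, the URLs, and the `depth_of` helper are all hypothetical; a real site would feed in its own crawl data.

```python
from collections import deque

def depth_of(links, target, homepage="/"):
    """Clicks from the homepage to `target`, or None if it is unreachable."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        if page == target:
            return depths[page]
        for linked in links.get(page, []):
            if linked not in depths:
                depths[linked] = depths[page] + 1
                queue.append(linked)
    return None

# Hypothetical ecommerce structure: each page maps to the pages it links to.
shop = {
    "/": ["/shop"],
    "/shop": ["/shop/mens"],
    "/shop/mens": ["/shop/mens/shoes"],
    "/shop/mens/shoes": ["/shop/mens/shoes/running"],
    "/shop/mens/shoes/running": ["/product/trail-runner"],
}

before = depth_of(shop, "/product/trail-runner")

# Add the running-shoes category to the main navigation (linked from the homepage).
with_nav = dict(shop)
with_nav["/"] = shop["/"] + ["/shop/mens/shoes/running"]
after = depth_of(with_nav, "/product/trail-runner")

print(before, after)  # prints "5 2"
```

A single well-chosen navigation link takes the product from 5 clicks deep to 2 in this example, and every other product in that category benefits in the same way.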

How to Improve Crawl Depth on Your Website

So, how do you ensure that search engines are able to crawl all of your web pages without expending all of their resources?

You reduce the crawl depth on your website.

To do this, you will first need to assess your current crawl depth. Then, depending on this status, you will implement specific changes to reduce the number of clicks (from the homepage) as much as possible.

1. Analyze Your Current Crawl Depth

Analyze your website’s existing crawl depth using a crawling tool like Screaming Frog, which we talk about in our list of Top SEO Analytics Tools. If your website has over 500 URLs, you will need a paid license for the tool. If you use a different tool, make sure to choose one that can accommodate the total number of URLs on your website.

A few metrics to look out for include:

- Crawl Depth Distribution – A distribution of how many web pages are 0, 1, 2, 3, etc. clicks away from the homepage. A high number of pages sitting more than 3 clicks away from the homepage indicates unoptimized crawl depth.

- Orphan Pages – Any pages that are not linked from anywhere on your site (but are still indexable), meaning neither users nor crawlers can reach them through your site navigation or internal links.

- Broken Internal Links – Error codes indicating that any of your internal links (from one page on your site to another) are broken.
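If you prefer to see how these three metrics fit together, the sketch below computes them from a small, made-up data set. It assumes you have a list of known pages (for example, from your XML sitemap), a map of internal links, and the status codes returned by link targets. The `audit` function and all of the URLs are hypothetical, and orphan pages are treated here as known pages with no inbound internal links.

```python
from collections import Counter, deque

def audit(links, all_pages, status_codes, homepage="/"):
    """Return (depth distribution, orphan pages, broken internal links)."""
    # Breadth-first search gives the shortest click path to each page.
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)

    # How many known pages sit at each depth (unreachable pages are skipped).
    distribution = Counter(depths[p] for p in all_pages if p in depths)

    # Known pages that no other page links to.
    linked = {t for targets in links.values() for t in targets}
    orphans = sorted(p for p in all_pages if p != homepage and p not in linked)

    # Links whose target returned a 4xx or 5xx status (default: assume 200).
    broken = sorted(
        (source, target)
        for source, targets in links.items()
        for target in targets
        if status_codes.get(target, 200) >= 400
    )
    return distribution, orphans, broken

all_pages = ["/", "/services", "/blog", "/services/seo", "/blog/post-1", "/landing/promo"]
links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/post-1", "/old-page"],
}
status_codes = {"/old-page": 404}

distribution, orphans, broken = audit(links, all_pages, status_codes)
print(dict(distribution))  # {0: 1, 1: 2, 2: 2}
print(orphans)             # ['/landing/promo']
print(broken)              # [('/blog', '/old-page')]
```

Dedicated crawling tools report the same kind of information at scale; the point of the sketch is only to show what each metric is measuring.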

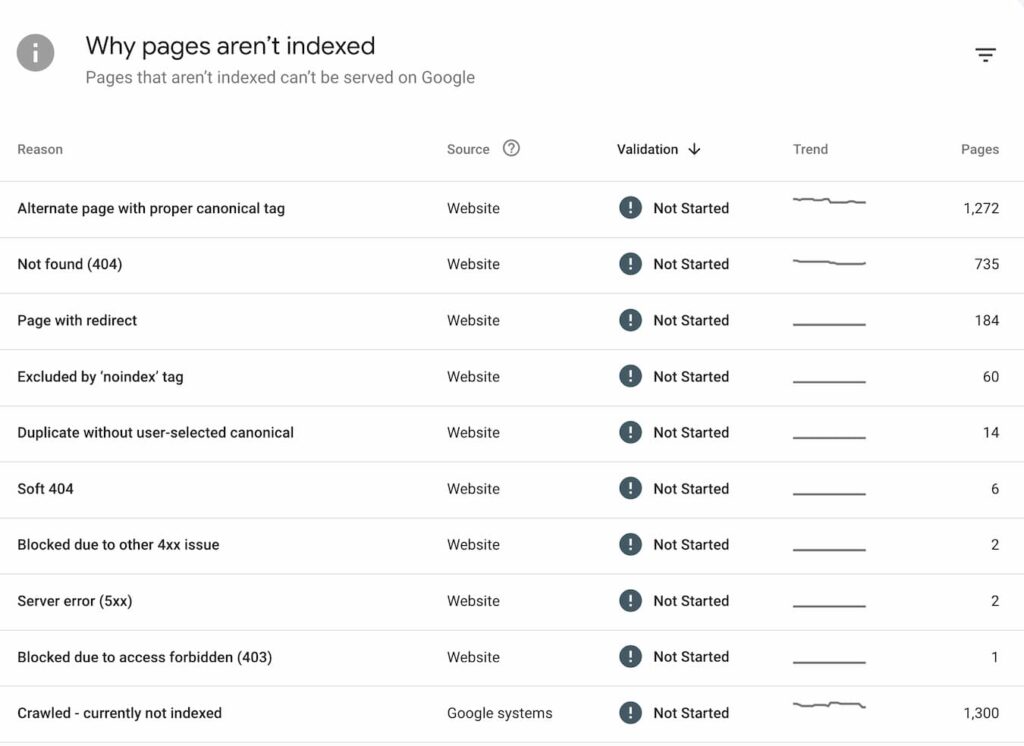

You should also review Google Search Console’s “Pages” report to see the distribution of pages that are indexed versus not indexed. Google Search Console will also tell you why a page isn’t indexed, such as the page being blocked by robots.txt, a page resulting in a 404 (broken) code, the presence of a server error (5xx), etc.

2. Compare to SEO Best Practices

Depending on the severity and cause(s) of your page depth issues, your site might require minor changes or a larger overhaul.

For example, if the majority of your pages are around 4 clicks away from the homepage, and these pages easily relate to categories (like Services or Products) on your website, you might be able to remedy this easily with internal links or adding them to the main navigation.

If, however, you have many orphan pages and/or many pages that are 5+ clicks away from the homepage, you may need to completely rethink your URL and internal linking structure.

Here are a few best practices to consider and implement:

- Update your main navigation to include your most important pages and page categories.

- Identify orphan pages and add links from existing pages to these pages.

- Add internal links from existing pages to “deeper” pages and relevant blog posts to reduce page depth.

- Review the “Pages” report in Google Search Console and follow Google’s recommendations for resolving the respective indexation issues.

- Redirect broken/404 pages from the old URL to a different, live URL.

- Consider adding breadcrumbs to the top of blog posts and web pages.

- Update your XML sitemap and robots.txt settings to ensure no important pages are being blocked from search engine crawlers.

- Fix broken internal links by updating them with relevant, live links.

- Consider adding an HTML sitemap, linked in your footer, to offer both users and search engines a quick layout of your site’s structure.

- For ecommerce and/or large websites, consider nesting products or various pages into Categories that can then be linked from your main navigation.
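For the robots.txt item in the list above, a quick sanity check can be sketched with Python’s built-in `urllib.robotparser` module. The rules and URLs below are invented for illustration, and the standard-library parser does not replicate every detail of how Googlebot interprets robots.txt (wildcard patterns, for example), so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, paste in your own file.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /services/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Hypothetical list of pages you want crawled and indexed.
important_pages = [
    "https://example.com/services/seo",
    "https://example.com/blog/crawl-depth",
]

# Collect any important page that the rules would block for Googlebot.
blocked = [url for url in important_pages if not parser.can_fetch("Googlebot", url)]
print(blocked)  # ['https://example.com/services/seo']
```

Here the `Disallow: /services/` rule accidentally blocks a page that should be crawlable, which is exactly the kind of issue worth catching before it costs you indexation.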

3. Optimize Your Crawl Budget

Google Search Console will show you the number of pages that are and are not being indexed on your site. If more of your pages are not indexed than indexed, or the vast majority of your pages aren’t being indexed at all, you may have a crawl depth issue.

Optimizing your crawl depth involves making sure all of your web pages are easily accessible by search engines and users. This may require implementing a completely new hierarchy of pages. It will likely include adding more internal links, implementing breadcrumbs, and resolving 404s.

Using these practices, you can greatly improve your website’s crawl depth, leading to better SEO performance and a better user experience!

4. Track and Review Performance

We recommend checking the “Pages” report in Google Search Console on a regular basis to track your progress. Over time, assuming you implement the best practices above, you’ll likely see more and more pages getting crawled and indexed!

Also, as you add new pages, be sure to implement intentional internal links. If you delete pages, set up redirects in their place and update any internal links that pointed to them so they go to other relevant, live pages.
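One way to keep internal links clean after removing pages is to rewrite them so they point straight at the final destination instead of passing through a redirect. The sketch below assumes a simple redirect map and link map; the `retarget_links` helper and the URLs are hypothetical.

```python
def retarget_links(links, redirects):
    """Point internal links at the final destination of any redirected URL."""
    def resolve(url):
        seen = set()
        # Follow redirect chains, stopping if a loop is detected.
        while url in redirects and url not in seen:
            seen.add(url)
            url = redirects[url]
        return url

    # Drop pages that have been removed (they are now redirect sources).
    return {
        page: [resolve(target) for target in targets]
        for page, targets in links.items()
        if page not in redirects
    }

# Hypothetical redirects: an old guide was moved twice.
redirects = {"/old-guide": "/guide-2023", "/guide-2023": "/guide"}
links = {
    "/": ["/old-guide", "/blog"],
    "/blog": ["/guide-2023"],
    "/old-guide": ["/"],
}

updated = retarget_links(links, redirects)
print(updated)  # {'/': ['/guide', '/blog'], '/blog': ['/guide']}
```

Linking directly to the live page saves crawlers an extra hop on every visit, while the redirects stay in place for anyone arriving from an old external link.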

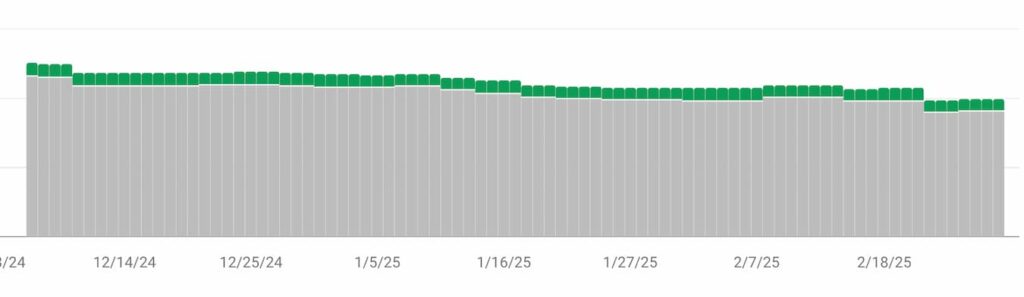

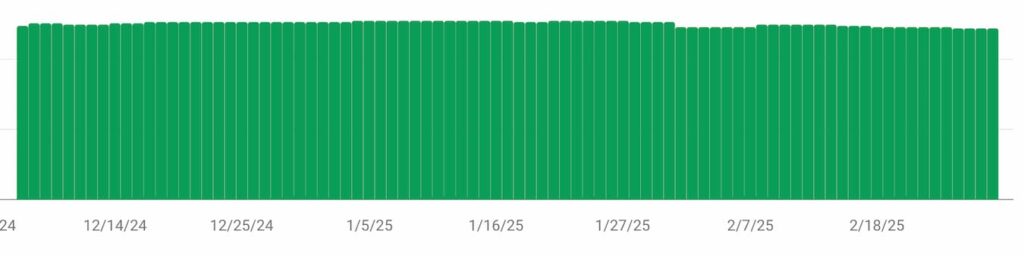

Over time, hopefully your indexation report will go from this…

To this …

We get it — staying on top of all things SEO can be time-consuming. From crawl depth to backlinks to internal linking, there are a lot of items to consider! That’s why we at Hennessey Digital help business owners like you tackle these tasks efficiently and effectively!

Many law firms face extensive crawl depth and indexation issues. As part of our work together, we will do a robust SEO audit to identify any and every issue. Most importantly, we will resolve these issues to get you better indexation, traffic, and leads!